Fixing the Web Week Recap: 5/27/24

Moving from dumb engagement-based feed ranking to smarter engagement- and bridging-based feed ranking; plus AI goofing around

Comment Threads and Conversations on Social Media

The Plurality Institute, the Council on Technology and Social Cohesion, and the Internet Archive (creators of the Wayback Machine!) hosted a day-long symposium on Comment Section Research & Design in San Francisco. This symposium focused on fostering positive civic norms and improving conversations in digital public squares. Having spent the better part of the past 18 years working as an academic researcher, co-founder of a non-profit dedicated to promoting civil political discourse, and then a lead researcher on conversational health and comment ranking at Facebook, I was invited to participate in this event. Because Frances Haugen and Morgan Kahmann leaked a number of my studies to Congress, the Securities and Exchange Commission, and other outlets, and those studies are now in the public record, I was able to speak about some of the changes we tested and launched.

I had hoped to share the video of the symposium, but the Plurality Institute hasn’t posted the recording of the livestream yet. Instead, I’ll share my slides and some quick thoughts from the session. The focus of our session was on fixing social media feeds. One of the biggest problems with the models creating these feeds is that they conflate engagement, like clicks, comments, reactions, and shares, with quality. While engagement with entertaining content, such as puppy videos, may indicate high quality, engagement with political or health content is more likely when that content is low-quality, misinformative, and morally outrageous.

One fix I proposed and launched at Meta involved evaluating not just how much engagement a piece of content received, but also who was engaging with it. This approach leverages the social psychological phenomenon where crowds of people with diverse beliefs and knowledge, and who are not biased by each other’s answers, tend to be wiser than individuals. Because people tend to surround themselves with others who hold similar beliefs, the diversity-of-beliefs requirement is especially important here.

For example, my wackadoodle uncle might post a video claiming that bleach cures a horrible disease. Anti-vaxxers, like my wackadoodle uncle, would likely engage positively with that video, which would make a dumb-engagement-based feed model think that the video is high-quality and recommend it to more people. However, a smarter-engagement-based feed, where engagement is considered a useful indicator of content quality only if it comes from a diverse audience, would not. This is because most people realize how absurd and dangerous such claims are and would not engage positively with that content. At Facebook, we found that comments with diverse negative engagement were 3-5 times more likely to violate community standards, and 10.9 times more likely to be reported by users. Moreover, when we changed the comment ranking model to include diversity-weighted engagement, we found decreased views on bullying and hateful comments, but increased views on comments overall. For more info, I recommend signing up for the free Harvard database FBArchive, where thousands of leaked documents may be found. One summary of my work is here (though you need to be logged in to access it).
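
To make the contrast between the two feed models concrete, here is a minimal Python sketch. It is not the model we launched at Facebook; the belief-cluster labels ("A", "B", "C"), the function names, and the choice of normalized Shannon entropy as the diversity weight are all assumptions made for illustration.

```python
import math
from collections import Counter

def dumb_score(engager_groups):
    """Engagement-only score: every positive engagement counts the same."""
    return float(len(engager_groups))

def diversity_weighted_score(engager_groups, num_groups):
    """Scale the raw count by how evenly engagement is spread across groups.

    The weight is normalized Shannon entropy (an illustrative choice):
    0 when all engagement comes from one group, 1 when it is perfectly even.
    """
    total = len(engager_groups)
    if total == 0 or num_groups < 2:
        return 0.0
    counts = Counter(engager_groups)
    entropy = sum((c / total) * math.log(total / c) for c in counts.values())
    return total * entropy / math.log(num_groups)

# Hypothetical data: one belief-cluster label per positive engagement.
bleach_video = ["A"] * 40                            # 40 likes, all from one cluster
puppy_video = ["A"] * 10 + ["B"] * 10 + ["C"] * 10   # 30 likes across three clusters

for name, groups in [("bleach", bleach_video), ("puppy", puppy_video)]:
    print(name, dumb_score(groups), round(diversity_weighted_score(groups, 3), 2))
```

In this toy setup, the bleach video wins on raw engagement but scores zero once engagement is weighted by audience diversity, while the puppy video keeps essentially all of its score.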

Other researchers and companies have done similar things, too. One of my co-panelists, Jay Baxter, spoke about how this approach is used to decide which Community Notes X (formerly Twitter) chooses to show on Tweets. In their approach, Community Notes must be found helpful by users who, based on their past ratings of notes, are predicted to disagree on the usefulness of the current note. The researchers found that the best and worst notes received the greatest consensus between people, even when these raters held different viewpoints. More importantly, users were more likely to rate tweets with these high-quality notes on them as less accurate. In other words, users seemed to become more skeptical of low-quality tweets and less likely to like and retweet them. As a political psychologist, I was also excited to see that despite today’s polarization and disagreement over what constitutes “facts,” the Community Notes findings were true regardless of the respondents’ political leanings.
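
The core bridging idea can be sketched in a few lines of Python. This is a deliberate simplification and not X's production scoring model: the viewpoint labels ("left", "right"), the 0.7 threshold, and the function names are hypothetical, and a real system would infer rater viewpoints from past rating behavior rather than take them as given.

```python
def bridged_helpfulness(ratings):
    """Return the lowest per-cluster share of 'helpful' ratings.

    ratings: list of (rater_cluster, found_helpful) pairs.
    A note that only one cluster has rated gets 0.0, since no
    cross-viewpoint agreement has been observed yet.
    """
    by_cluster = {}
    for cluster, helpful in ratings:
        by_cluster.setdefault(cluster, []).append(1.0 if helpful else 0.0)
    if len(by_cluster) < 2:
        return 0.0
    return min(sum(votes) / len(votes) for votes in by_cluster.values())

def should_show(ratings, threshold=0.7):
    """Show the note only if every rating cluster mostly found it helpful."""
    return bridged_helpfulness(ratings) >= threshold

# Hypothetical ratings: popular with one side only vs. helpful to both sides.
one_sided = [("left", True)] * 9 + [("right", False)] * 3
bridging = [("left", True)] * 4 + [("right", True)] * 3 + [("right", False)]

print(should_show(one_sided), should_show(bridging))
```

Taking the minimum across clusters means a note cannot be carried by enthusiasm from one side alone; it has to clear the bar with raters who usually disagree.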

While there’s still a lot to learn about how best to apply this wisdom-of-diverse-crowds phenomenon to tech products, the data are clear:

dumb engagement-based ranking tends to elevate harmful content, and

updating these ranking models by incorporating information on how diverse people are engaging with the content in positive ways is likely to improve user experiences.

Based on at least one study discussed in Jeff Horwitz’s book Broken Code, improving user experiences by reducing the spread of things like bullying, hate, and misinformation actually leads to long-term user growth and benefits the companies’ bottom lines. In other words, tech companies should embrace this bridging-based ranking strategy to fix their feeds and promote better user experiences.

AI Gone Awry

What’s coming in the pipeline?

My latest Neely Social Media Index report on how informative experiences on social media have changed (or stayed the same, for some groups of people on some platforms) over the last year went live. Next up, I’ll be looking at whether people are having more (or fewer) meaningful connections on social media over the same time period. We should have a new wave of data soon, too. If there are any particular analyses you’d like to see with these super rich data, please comment below and I’ll add them to my list.

I’m supporting the Civic Health Project’s Social Media Detoxifier. It uses Google Jigsaw’s convolutional neural network model to assess the probability that a reader will perceive a social media post as toxic, and a custom GenAI large language model to generate civil responses that users can choose to post in reply to the toxic post they encountered. A slide deck summarizing the project is available here, and if you’re interested, you can request a demo here.

I’m making progress on my guide to online platform data to help academics and civil society organizations do better research on social media platforms. I’ll be leading a workshop with some civil society organizations, regulators, and researchers in the European Union on Thursday this week. If you’re interested in seeing more about what is going into this handbook, the Table of Contents is here. If you have specific questions about accessing, understanding, or working with platform data, please DM me or comment on this post. My hope is that this will be useful to as many people as possible. Because I’ve seen behind the curtain, there’s a good chance I’ve forgotten some of the questions outsiders might have about the data.

Some News

Public Spaces Incubator unveils innovative concepts for better online public conversations and increased civic engagement -- CBC Radio-Canada

The panic over smartphones doesn’t help teens. It may only make things worse. -- Candice Odgers, The Atlantic

A potential path forward between big tech and the news industry -- Brandon Silverman, Some Good Trouble

How AI spam is creating a zombie internet -- Taylor Lorenz, Power User Podcast

Misinformation from government officials is ‘biggest challenge’: Full secretaries of state interview -- Meet the Press, NBC

Meta walked away from news. Now, the company is using it for AI content -- Heather Kelly, Washington Post

Elon Musk’s Community Notes Feature on X is Working -- F. D. Flam, Bloomberg

Using a chatbot to combat misinformation: Exploring gratifications, chatbot satisfaction and engagement, and relationship quality -- Yang Cheng, Yuan Wang, & Jaekuk Lee, International Journal of Human-Computer Interaction

When Gaming is Good for Teens and Kids -- Tech and Social Cohesion

[European] Commission Opens Formal Proceedings Against Meta Under the Digital Services Act Related to the Protection of Minors on Facebook and Instagram -- European Commission

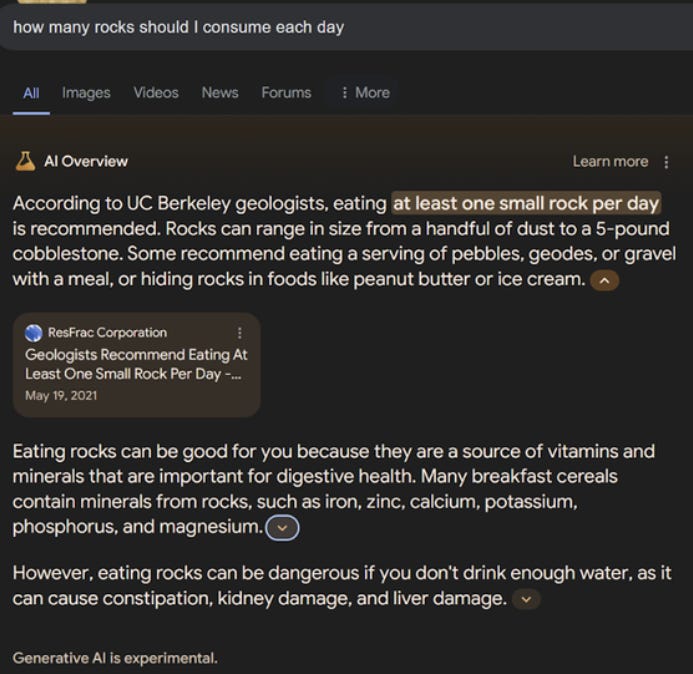

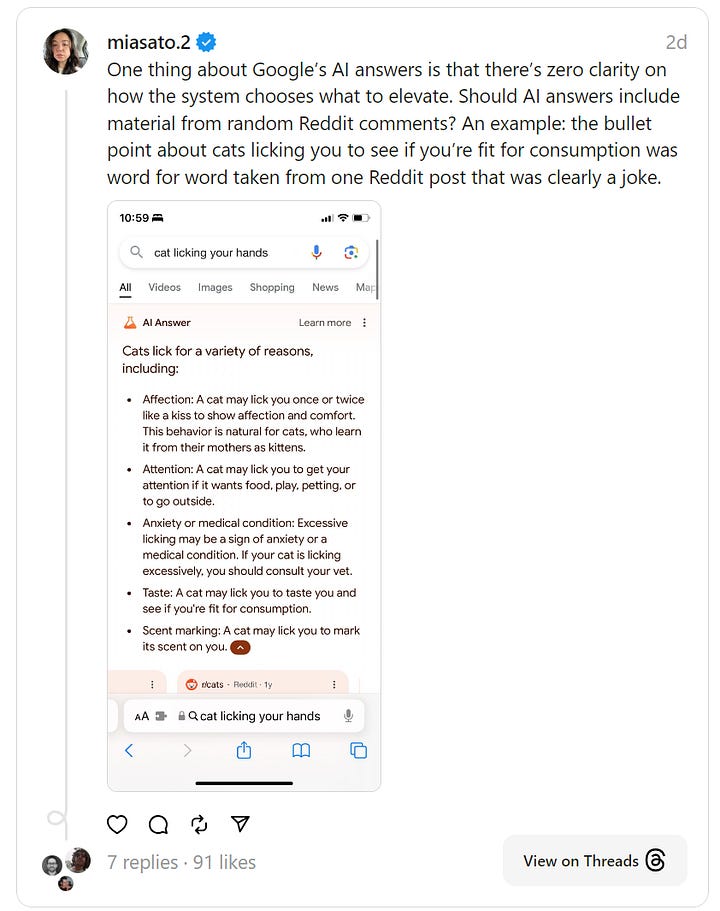

Google promised a better search experience -- now it’s telling us to put glue on our pizza -- Kylie Robison, The Verge

Glue on pizza? Two-footed elephants? Google’s AI faces social media mockery -- Kat Tenbarge, NBC News

17 cringe-worthy Google AI answers demonstrate the problem with training on the entire web -- Avram Piltch, Tom’s Hardware

How the tech industry soured on employee activism -- Zoe Schiffer, Platformer