Fixing the Web Week Recap: 5/20/24

My meetings with key stakeholders responsible for enforcing the Digital Services Act, which regulates tech companies, and with related civil society organizations.

I am writing from the seat of the European Union in Brussels, Belgium. After 15 years studying social media, 4 years working in social media, and the past year-plus trying to fix social media from the outside, I was invited for an action-packed week with key stakeholders responsible for enforcing the expansive Digital Services Act regulating tech companies.

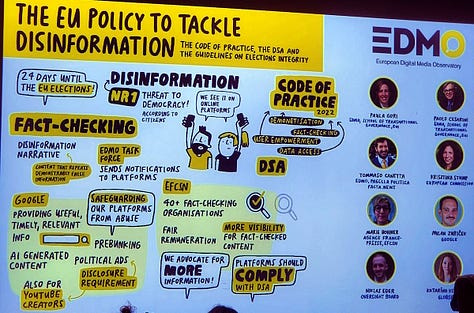

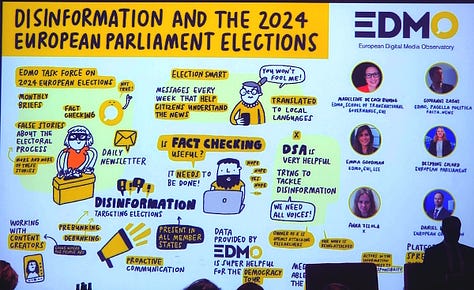

European Digital Media Observatory

The week began with the annual European Digital Media Observatory conference, which centered on the potential impacts of artificial intelligence and social media on the EU’s first parliamentary elections since 2019. The top fear raised by researchers was deepfake videos released on the eve of the elections: more people now have access to increasingly believable video-generating AI tools, and they can post their creations to social media platforms that are unlikely to be able to verify whether the videos are real before distributing them to billions of voters. While the experimental evidence on how social media and deepfakes affect elections is unclear, it’s fair to say that recommending videos showing people doing objectionable things they never did is… not great.

Another major theme, and the reason for my invitation, was data access and platform transparency. Specifically, the Digital Services Act requires platforms to share data with key stakeholders and researchers. Despite this requirement, many platforms have actually been reducing access to their data. For example, Meta announced it was shutting down CrowdTangle, which was the best tool for tracking in real time what news Facebook and Instagram were amplifying to their billions of users. Although Meta announced a replacement tool, almost nobody in the large audience who previously had access to CrowdTangle has been granted access to it (myself included). And X (formerly Twitter) now charges around $500,000 per year for access to less data than it used to offer for free, effectively putting X data out of reach for most academic and non-profit researchers (again, myself included). That data access requirement is the basis for some of the current investigations into these companies. If found non-compliant, they could be fined up to 6% of their annual global revenue. For Meta, that fine would be a cool $8,040,000,000 (based on 2023 revenue).
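For anyone who wants to check the math on that fine, here is a minimal sketch of the calculation. It assumes a round 2023 revenue figure of about $134 billion, which is the number the $8.04 billion ceiling implies; the function and constant names are made up for illustration, and the actual legal calculation of a fine may well differ.

```python
# Fine ceiling as described in the text: up to 6% of annual global revenue.
DSA_MAX_FINE_PERCENT = 6


def max_dsa_fine(annual_global_revenue_usd: int) -> int:
    """Return the fine ceiling in whole US dollars.

    Integer arithmetic is used so large dollar amounts are not
    affected by floating-point rounding.
    """
    return annual_global_revenue_usd * DSA_MAX_FINE_PERCENT // 100


# Assumption: roughly $134 billion, the 2023 revenue figure implied by
# the $8.04 billion number in the text (not an exact reported value).
ASSUMED_META_2023_REVENUE_USD = 134_000_000_000

meta_fine_ceiling = max_dsa_fine(ASSUMED_META_2023_REVENUE_USD)
print(f"${meta_fine_ceiling:,}")  # prints $8,040,000,000
```

Swapping in another company's annual global revenue gives the corresponding ceiling under the same 6% assumption.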

After the conference, EDMO hosted representatives from the 27 EU member states’ hubs for a discussion around platform data. They invited me to speak about what data tech companies collect about their users, how the data are structured, and what researchers and regulators might need to ask for when requesting data. In preparing this presentation, and through many conversations over the course of the week, it became clear to me that explaining these things to people who haven’t worked on the technical side inside these companies is sorely needed. So, I’ve begun working on an interactive e-book on social technology data practices and on how outsiders can learn to work with the data that these companies are increasingly going to be required to make publicly available. For a brief summary, check out my slide deck here.

European Commission, Center for Democracy & Technology - Europe, Mozilla, and more

The Integrity Institute Research & Policy team and I had many other meetings with key partners like the European Commission, the Center for Democracy & Technology - Europe, and the Mozilla Foundation. While I can’t yet share too much about those conversations, I will highlight three observations:

The European Commission has an incredibly sophisticated group of experts working on all things related to the Digital Services Act. The expertise was striking to me, as most of my prior advising and consultation was with United States-based elected officials and staff, who historically have not been particularly experienced in how the latest technology works (or fails to work). However, I have seen improvements on the US side, especially in the US Senate Judiciary Committee hearing on child safety earlier this year, and I am optimistic that the improvement will continue thanks to the federal push to hire more technologists into the public sector and programs like TechCongress.

The European Union is way ahead of the US when it comes to tech regulation, and its experience should provide many lessons to other countries as they move toward regulating an industry that has operated without much oversight for the past few decades. It’s too early to say whether the DSA will be effective in reducing harms caused by or related to social technologies, but we will start to see some of the effects in the upcoming months as companies’ risk assessments are released to the public (ETA late August 2024) and their data transparency programs become more accessible. Regardless, this is the most far-reaching tech regulatory policy in the world and should be incredibly informative.

Much like in the US, there are some excellent civil society and non-profit organizations working to create a safer and more useful social internet. Much gratitude to the Center for Democracy & Technology - Europe and the Mozilla Foundation for their important work.

All in all, it was a great trip, and I may be heading back later this year to lead workshops on how to understand and work with online platform data.

What’s coming in the pipeline?

My next research report on the nationally representative, longitudinal Neely Social Media Index survey of US adults for the University of Southern California’s Neely Center will be landing this week. This report examines whether users are learning more (or less) important and/or useful information on social media and communication services than they were a year ago. Be on the lookout; it’s a fun one.

With the release of Jonathan Haidt’s The Anxious Generation and Pete Etchells’ Unlocked: The Real Science of Screen Time, there are lots of strong claims being made about how screen time and social media may be harming people. Having spent 4 years working in tech on some of these issues, including advising the US White House Coronavirus Task Force during the pandemic, and having more recently advised a number of State Attorneys General in their cases against some platforms regarding harms to their users, I feel compelled to chime in here. I’ve got some great guests lined up for what will likely turn into a multi-part series.

I’m putting together a guide to working with platform data to help academics and civil society organizations do better research on social media platforms. This will take a long time, but I’ll share chunks of it as they come together. If you have questions or requests, please do reach out. I want to make this as useful for as many people and purposes as I can. [WIP slide deck with 30,000-foot summary]

Some News

What universities might learn from social media companies about content moderation [and free speech] — Daniel Kreiss & Matt Perault, Tech Policy Press

Election officials are role-playing AI threats to protect democracy — Lauren Feiner, The Verge

The effects of Facebook and Instagram on the 2020 election: A deactivation experiment — Hunt Allcott et al., Proceedings of the National Academy of Sciences

Platforms’ election interventions in the global majority are ineffective - Mozilla

Tech firms told to hide ‘toxic’ content from children — Tom Singleton & Imran Rahman-Jones, BBC

The AI Index Report: Measuring Trends in AI — Stanford Institute for Human-Centered Artificial Intelligence

Prepare to get manipulated by emotionally expressive chatbots — Will Knight, Wired

Maven is a new social network that eliminates followers—and hopefully stress — Matthew Hutson, Wired

Billionaire Frank McCourt Assembles “People’s Bid” to Buy TikTok — Dan Primack

OpenAI’s Long-Term AI Risk Team Has Disbanded — Will Knight, Wired

US Senate AI Report Meets Mostly Disappointment, Condemnation — Gabby Miller & Justin Hendrix, Tech Policy Press

Platform Accountability and Transparency Act, S. 1876, 118th Cong. — Harvard Law Review

Microsoft’s AI chatbot will ‘recall’ everything you do on its new PCs — Associated Press

That’s all for this post, but if you enjoyed it please consider subscribing and sharing with your friends and family. Thank you.